Archive - Short essays, research and random thoughts

Author's note on 2020

Most of the entries in 2020 were about Covid-19. I have not included them here because most of them now seem out of date.

A theory of oppositional altruism - December 2020

Author’s note: I cannot claim to be the sole author of this piece. The idea was inspired by my 8-year-old daughter, who recently asked me whether misinformation can ever be good for society.

Social media has been used to actively spread misinformation in pursuit of a range of pernicious objectives. The spread of health misinformation may be particularly worrisome because it can change behaviours in ways that put individuals and society at risk.

However, what is the total health impact of health misinformation? Is it possible that some people are motivated to make better health decisions when they encounter misinformation? If they are, then the net negative social impact of misinformation may be less than we think.

For example, when some people read stories about anti-vaccination proponents and vaccine hesitancy, they may be more inclined to ensure their vaccines are up to date than they would have been otherwise. This could be because (1) they are concerned that vaccine hesitancy in the population puts them at greater risk of infection, and (2) they are motivated to actively oppose misinformation as a way of expressing their personal views and increasing their sense of agency. The second motivation resembles what has been seen in heavily contested elections: when an opposing political movement is particularly detestable, it may motivate a larger number of normally politically inactive people to get out and vote.

I propose a theory that some people, as an oppositional response to misinformation, make socially beneficial decisions that they would not otherwise make. I refer to this as oppositional altruism.

The motivation for an oppositional altruist is to make a personally satisfying decision in response to health misinformation. True altruists have a different motivation (they make personal sacrifices for society), but the consequences of both kinds of altruism are the same. If oppositional or genuine altruism is a motivating force for individuals, then the resulting behaviours could offset some of the social harms of misinformation.

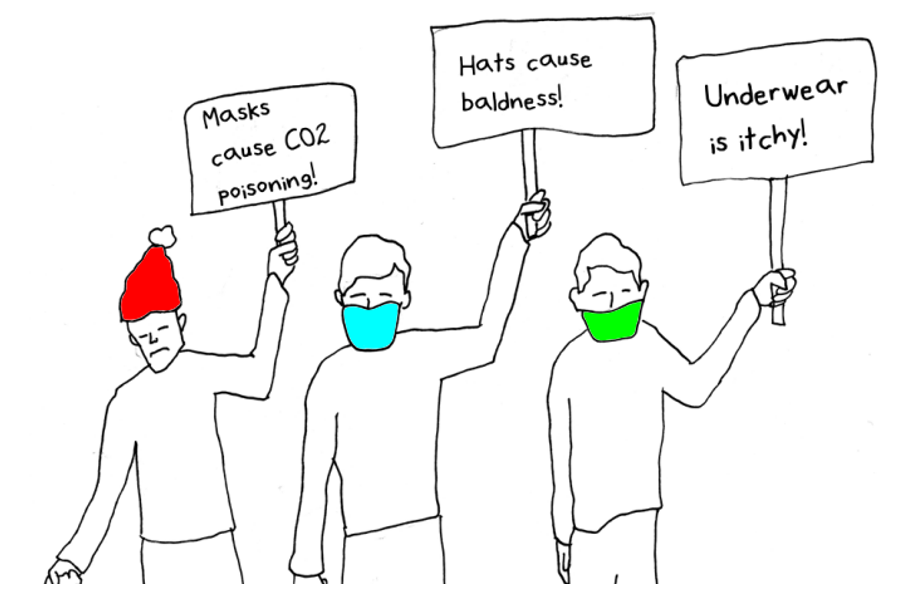

Consider a timely example. Some people publicly (and often loudly) argue against wearing masks to reduce the spread of SARS-CoV-2. If masks do offer protection from the spread of infection, then not wearing them has a negative social impact (increasing the spread of infection and sickness in others), and the message not to wear a mask is misinformation. In response to this misinformation, oppositional altruists may be more inclined to wear masks than they would otherwise be, offsetting (at least to some degree) the decisions of anti-maskers. Moreover, if there are considerably more oppositional altruists than there are anti-maskers, then the net impact of the misinformation may be more mask wearing overall and less infection!

There is no direct evidence for oppositional altruism that I know of. However, the theory of risk homeostasis offers some indirect support from a risk management perspective. The theory says that people try to maintain a tolerable level of risk in their lives; we take some risks but then compensate for them by avoiding others. We seek to balance these risks in order to meet a perceived target level of risk that we find satisfying. Some of the risks we face come from the social environment, and when we see these risks increase, we may take personal actions to reduce these and/or other risks to get back to our target level. When these personal decisions can also reduce the risks that others face, that is oppositional altruism.

Oppositional altruism is not guaranteed to offset the harms of health misinformation. However, if people do sometimes behave as oppositional altruists, it could give us reason to be optimistic and hopeful in spite of the abundance and rapid spread of health misinformation. Perhaps the more health misinformation there is, the greater the oppositional altruism, which would act as a sort of governor on social risk-taking that keeps health misinformation from causing runaway social harm.

A paradox of consensus - July 2020

Consider the following. After years of study, researchers estimate with a high degree of certainty that there is a 60% chance that a particular event (call it A) will happen. When asked to make a discrete prediction of whether or not A will actually happen on a given occasion, 100 out of 100 experts independently conclude that it will happen.

Now consider this. After years of study, researchers estimate with a high degree of certainty that there is a 50% chance that a particular event (call it B) will happen. When asked to make a discrete prediction of whether or not B will actually happen on a given occasion, 50 out of 100 experts independently conclude that it will happen.

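The expert predictions in both of these scenarios are perfectly rational. For A, ‘happens’ is the call most likely to be right (60% of the time, versus 40% for ‘does not happen’); for B, both calls are equally likely to be right, so an even split is what we would expect. Individually, these independent predictions are the most accurate discrete calls, in the long run, about whether A and B will happen. However, in the second scenario the aggregate prediction (e.g., the average of the calls) is precisely correct, while in the first scenario it is wildly wrong: 100 ‘yes’ calls out of 100 imply a 100% chance for an event whose true chance is 60%.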
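A small simulation can make the two scenarios concrete. It is a sketch under simplifying assumptions that are mine, not part of the scenarios above: each expert knows the true probability exactly, calls ‘happens’ whenever it is above 50% and ‘does not happen’ whenever it is below, and breaks an exact 50% tie with a fair coin flip. The helper names (`rational_call`, `hit_rate`, `vote_share`) are hypothetical.

```python
import random


def rational_call(p: float, rng: random.Random) -> int:
    """Return 1 ('happens') or 0 ('does not happen') for an event with probability p.

    Assumption: the expert picks whichever outcome is more likely and flips a
    fair coin when both outcomes are equally likely (p == 0.5).
    """
    if p > 0.5:
        return 1
    if p < 0.5:
        return 0
    return rng.randint(0, 1)


def hit_rate(p: float, call: int) -> float:
    """Long-run fraction of occasions on which a given call turns out to be correct."""
    return p if call == 1 else 1 - p


def vote_share(p: float, n_experts: int, seed: int = 0) -> float:
    """Aggregate prediction: fraction of n_experts who independently call 'happens'."""
    rng = random.Random(seed)
    calls = [rational_call(p, rng) for _ in range(n_experts)]
    return sum(calls) / n_experts


for label, p in [("A", 0.6), ("B", 0.5)]:
    share = vote_share(p, n_experts=100)
    print(f"Event {label}: true chance {p:.0%}, aggregate vote share {share:.0%}")

# For A, a 'yes' call is right 60% of the time and a 'no' call only 40%,
# so every rational expert says 'yes'.
print(hit_rate(0.6, 1), hit_rate(0.6, 0))
```

Event A reports a 100% vote share against a true chance of 60%. Event B comes out near 50%, though the coin-flip tie-breaks mean the exact split depends on the seed; the scenario's 50 out of 100 is the idealized version of that split.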

If you want to see a real-world example, consider the predictions of 18 experts for the 2020 NHL postseason.

https://www.sportsnet.ca/hockey/nhl/sportsnet-nhl-insiders-2020-stanley-cup-playoffs-predictions/

All 18 experts predict that Pittsburgh is going to win their playoff series. For each expert this prediction makes sense: by most measures, Pittsburgh is the better team. However, taken together, these predictions do not give a realistic picture of the actual probability that Pittsburgh will win. As bad as Montreal is, they have a better than 0% chance of winning the series.

In contrast, if we divide the number of experts predicting that New York will win by the total number of predictions, New York is given a 56% chance of winning their series. This number is probably a pretty good long-run estimate of the probability that New York will win the series. There is no consensus, and that actually yields a more realistic aggregate prediction!
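The arithmetic behind these figures is just a vote share. The Pittsburgh count (18 of 18) comes from the predictions described above; the New York count of 10 is my inference rather than a figure from the source, since 10 of 18 is the only count out of 18 that rounds to 56%. The function name `share_of_picks` is hypothetical.

```python
def share_of_picks(picks: int, total_predictions: int) -> float:
    """Fraction of expert predictions that pick a given team to win."""
    return picks / total_predictions


pittsburgh = share_of_picks(18, 18)  # all 18 experts picked Pittsburgh
montreal = 1 - pittsburgh            # the chance this aggregate leaves for Montreal
new_york = share_of_picks(10, 18)    # assumed count, inferred from the 56% figure

print(f"Pittsburgh {pittsburgh:.0%}, Montreal {montreal:.0%}, New York {new_york:.0%}")
# Pittsburgh 100%, Montreal 0%, New York 56%
```

The unanimous pick turns into a 0% chance for Montreal, which no expert would actually defend, while the split pick for New York lands on a plausible probability.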

What this quasi-paradox suggests is that the closer experts are to a consensus about an event, the more likely their combined yes/no predictions are to misstate the event's true probability. If we combine such predictions, we will tend to think the event is more (or less) probable than it actually is.

This is a reminder that, when consulting an expert, we should not ask whether something will happen, but instead ask how probable it is. Among other things, a probability is something we can average across experts to get a sort of ‘meta’ prediction.
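As a sketch of the difference, suppose five experts are asked for probabilities about event A instead of yes/no calls. The five estimates below are invented for illustration, not data. Averaging them stays close to the experts' shared view of about 60%, while converting the same estimates to yes/no calls first and then averaging gives 100%.

```python
from statistics import mean

# Hypothetical probability estimates for event A from five experts (illustrative, not real data).
estimates = [0.55, 0.60, 0.62, 0.58, 0.65]

# The 'meta' prediction: average the probabilities themselves.
meta_probability = mean(estimates)

# The alternative: each expert gives a discrete call ('happens' whenever p > 0.5),
# and we average the calls instead.
calls = [1 if p > 0.5 else 0 for p in estimates]
meta_vote_share = mean(calls)

print(f"Average of probabilities: {meta_probability:.0%}")  # Average of probabilities: 60%
print(f"Share of 'yes' calls: {meta_vote_share:.0%}")        # Share of 'yes' calls: 100%
```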

It is also a reminder not to mistake expert consensus about an event for a guarantee that the event will happen.

Note, August 2021: Almost the EXACT SAME prediction mistake was made in 2021. All Sportsnet analysts predicted Toronto would beat Montreal in the first playoff round, but Montreal won the series!